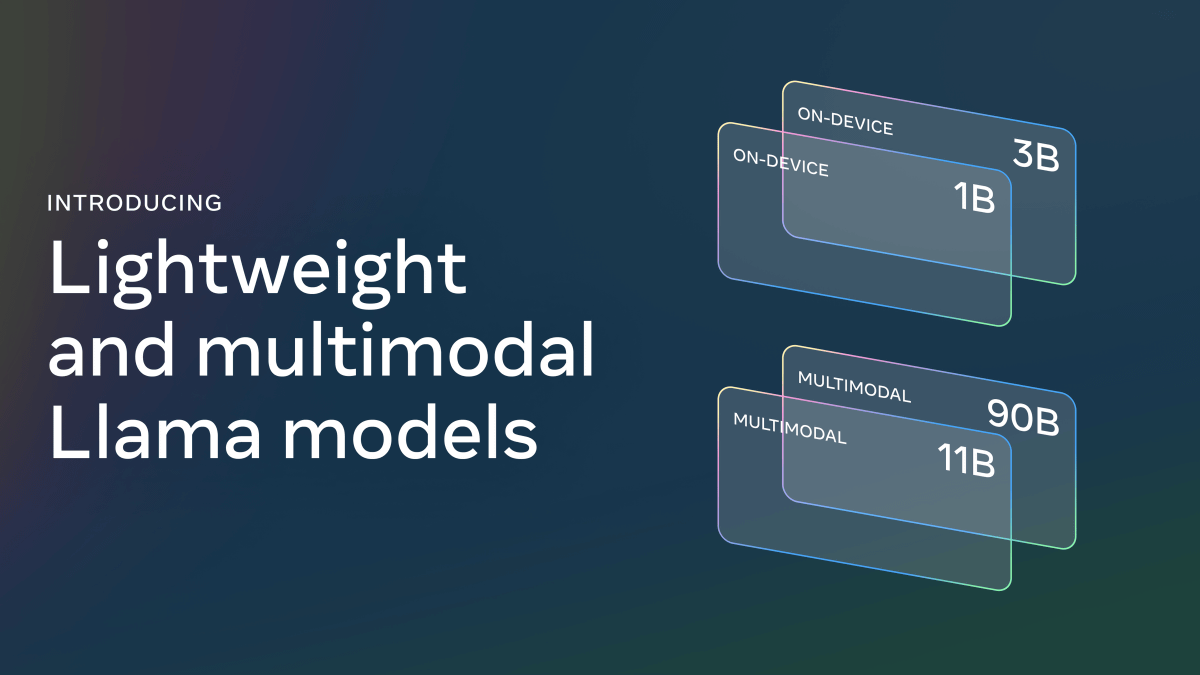

Llama 3.2 is a family of large language models (LLMs) from Meta that adds smaller, more lightweight options than Llama 3.1 offered. It spans small and medium-sized vision models (11B and 90B parameters) and lightweight text-only models (1B and 3B parameters). The 1B and 3B models are specifically designed for use on edge devices and mobile platforms.

Llama 3.1, which was launched in July 2024, is an open-source model family whose largest version has 405B parameters, making it difficult to deploy at scale for widespread use. This challenge led to the development of Llama 3.2.

Llama 3.2’s 1B and 3B models are lightweight AI models designed for mobile devices, which is why they only support text-based tasks. Larger models, on the other hand, are meant to handle more complex processing on cloud servers. Thanks to their smaller parameter counts, the 1B and 3B models can run directly on-device, with a context window of up to 128K tokens (roughly 96,000 English words) for tasks like text summarization, sentence rewriting, and more. Because the processing occurs on-device, user data never has to leave the device, which also improves data security and privacy.
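The word count quoted for the 128K-token context window is an estimate rather than a fixed property of the model. A minimal sketch of the arithmetic, assuming the common rule of thumb of about 0.75 English words per token (the real ratio depends on the tokenizer and the text), lands in the same range as the figure above:

```python
# Rule of thumb (an assumption, not a tokenizer guarantee):
# roughly 0.75 English words per token.
WORDS_PER_TOKEN = 0.75


def approx_words(num_tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit in a given number of tokens."""
    return round(num_tokens * words_per_token)


context_tokens = 128_000  # "128K" read here as 128,000 tokens
print(approx_words(context_tokens))  # 96000
```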

Meta’s Llama 3.2 models are aimed squarely at mobile and on-device applications. The 1B and 3B models, specifically, are designed to run smoothly on smartphone-class hardware, including SoCs (systems on a chip) from Qualcomm and MediaTek and other Arm-based processors. This opens up new possibilities for bringing advanced AI capabilities directly to your pocket, without needing a powerful server.
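To see why parameter count matters for phones, it helps to estimate how much memory the weights alone occupy. The sketch below is a back-of-the-envelope calculation, not a measurement: it assumes the nominal parameter counts (exactly 1 and 3 billion) and common numeric precisions, and it ignores the KV cache, activations, and runtime overhead.

```python
# Bytes needed to store one parameter at common precisions (assumed formats).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in GB (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Nominal sizes; the real models have slightly different parameter counts.
models = {"1B": 1e9, "3B": 3e9}

for name, params in models.items():
    for precision, nbytes in BYTES_PER_PARAM.items():
        print(f"{name} @ {precision}: {weight_memory_gb(params, nbytes):.1f} GB")
```

Under these assumptions, a 1B model needs about 2 GB at 16-bit precision and a 3B model about 6 GB, which is why lower-precision formats are attractive on devices with limited RAM.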

According to Meta, the Llama 3.2 1B and 3B models are derived from the larger Llama 3.1 models (8B and 70B). These smaller models are created using a process called “knowledge distillation,” where larger models “teach” the smaller ones. The outputs of the large models are used as targets during the training of the smaller models. This process adjusts the smaller models’ weights so that they retain much of the performance of the original larger models. In simple terms, this approach helps the smaller models reach better performance than they would if trained from scratch.
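The idea of using a large model's output as a training target can be made concrete with a small example. The sketch below shows the textbook soft-target formulation of knowledge distillation, where the loss is the KL divergence between the teacher's and the student's next-token distributions. It is an illustration under assumptions, not Meta's actual training recipe: the temperature value, the three-entry toy vocabulary, and the function names are all made up for this example.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_total = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_total for x in scaled]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) for one token position.

    The teacher's softened distribution is the target; the loss is zero
    when the student reproduces it exactly.
    """
    log_p = log_softmax(teacher_logits, temperature)  # teacher (target)
    log_q = log_softmax(student_logits, temperature)  # student (prediction)
    return sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))


# Toy logits over a 3-token vocabulary (hypothetical values).
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]  # nearly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers a different token

print(distillation_loss(close_student, teacher))
print(distillation_loss(far_student, teacher))
```

During training, minimizing this loss by gradient descent pulls the student's distribution toward the teacher's; the student that already agrees with the teacher gets the smaller loss.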

For more complex tasks, Meta has also introduced the larger Llama 3.2 vision models, sized at 11B and 90B. These models handle not only text but also images. For example, they can be applied to tasks like understanding charts and graphs. Businesses can use these models to get deeper insights from sales data, analyze financial reports, or even automate complex visual tasks that go beyond text analysis.

With Llama 3.2, Meta is pushing the boundaries of AI, from mobile-optimized, secure, on-device processing to more advanced cloud-based visual intelligence.

In its earlier versions, Llama was primarily focused on processing language (text) data. However, with Llama 3.2, Meta has expanded its capabilities to handle images as well. This transformation required significant architectural changes and the addition of new components to the model. Here’s how Meta made it possible:

1. Introducing an Image Encoder: To enable Llama to process images, Meta added an image encoder to the model. This encoder translates visual data into a form that the language model can understand, effectively bridging the gap between images and text processing.

2. Adding an Adapter: To seamlessly integrate the image encoder with the existing language model, Meta introduced an adapter. This adapter connects the image encoder to the language model using cross-attention layers, which allow the model to combine information from both images and text. Cross-attention helps the model focus on relevant parts of the image while processing related textual information.

3. Training the Adapter: The adapter was trained on paired datasets consisting of images and corresponding text, allowing it to learn how to accurately link visual information to its textual context. This step is crucial for tasks like image captioning, where the model needs to interpret an image and generate a relevant description.

4. Additional Training for Better Visual Understanding: Meta took the model’s training further by feeding it various datasets, including both noisy and high-quality data. This additional training phase ensures that the model becomes proficient at understanding and reasoning about visual content, even in less-than-ideal conditions.

5. Post-Training Optimization: After the training phase, Llama 3.2 underwent optimization using several advanced techniques. One of these involved leveraging synthetic data and a reward model to fine-tune the model’s performance. These strategies help improve the overall quality of the model, allowing it to generate better outputs, especially when dealing with visual information.
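The cross-attention mechanism mentioned in step 2 can be sketched in a few lines. In the simplified version below, each text vector acts as a query and attends over the image encoder's output vectors, which serve as both keys and values. This is a minimal single-head illustration under assumptions: the learned query, key, and value projections of a real adapter are omitted, and the tiny 2-dimensional vectors are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(text_states, image_features):
    """Let every text vector attend over all image vectors.

    Returns (outputs, weights): one image-informed vector per text token,
    plus the attention weights over image patches for inspection.
    """
    dim = len(image_features[0])
    outputs, all_weights = [], []
    for query in text_states:
        # Scaled dot-product score between this text token and each patch.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in image_features
        ]
        weights = softmax(scores)
        # Weighted average of the image vectors (used here as the values).
        mixed = [
            sum(w * value[d] for w, value in zip(weights, image_features))
            for d in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Two hypothetical image patches and two hypothetical text tokens.
image_features = [[1.0, 0.0], [0.0, 1.0]]
text_states = [[4.0, 0.0], [0.0, 4.0]]

outputs, weights = cross_attention(text_states, image_features)
for token_weights in weights:
    print([round(w, 3) for w in token_weights])
```

Each text token puts most of its attention on the patch it aligns with, which is the sense in which cross-attention lets the model focus on the relevant parts of an image.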

With these changes, Meta has evolved Llama from a purely text-based model into a powerful multimodal AI capable of processing both text and images, broadening its potential applications across industries.

When it comes to Llama 3.2’s smaller models, both the 1B and 3B versions show promising results. The Llama 3.2 3B model, in particular, demonstrates impressive performance across a range of tasks, especially on more complex benchmarks such as MMLU, IFEval, GSM8K, and HellaSwag, where it competes favorably against Google’s Gemma 2 2B IT model.

Even the smaller Llama 3.2 1B model holds its own, showing respectable scores despite its size, which makes it a great option for devices with limited resources. This performance highlights the efficiency of the model, especially for mobile or edge applications where resources are constrained.

Overall, the Llama 3.2 3B model stands out as a small but highly capable language model, with the potential to perform well across a variety of language processing tasks. It’s a testament to how even compact models can achieve excellent results when optimized effectively.